Performance and Scalability Training: DrupalCon Austin


- Initial Commands

- Installing Drupal

- Load Testing with ab

- Chart

- APC or Opcache

- Apache/PHP tuning

- MySQL Tuning

- XHProf

- Installing the PECL extension

- Installing the Drupal module

- Tuning the Views Cache

- Redis

- Varnish

- Other notes

Initial Commands

```shell
# Getting ready for Chef.
apt-get update
curl -L http://www.opscode.com/chef/install.sh | sudo bash
apt-get -y install git-core

# Running Chef.
git clone https://github.com/msonnabaum/drupalcon-training-chef-repo.git
cd drupalcon-training-chef-repo
chef-solo -c config/solo.rb -j config/run_list.json

# Downloading Drupal
cd /var/www
drush dl drupal
```

Installing Drupal

Navigate to http://${SERVER_IP}/drupal-7.28/

```shell
# Make a MySQL database.
mysql -uroot -p1234 -e'CREATE DATABASE drupal'

# Let Apache write the Drupal files dir and settings.php.
mkdir /var/www/drupal-7.28/sites/default/files
chown www-data /var/www/drupal-7.28/sites/default/files
cp /var/www/drupal-7.28/sites/default/{default.,}settings.php
chown www-data /var/www/drupal-7.28/sites/default/settings.php
```
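Before starting the web installer, it can help to confirm that the chown steps took effect. A minimal sketch, assuming GNU coreutils `stat` (its `-c %U` format prints the owner's user name; BSD `stat` uses different flags). `check_owner` is a hypothetical helper name, not part of Drupal or Drush:

```shell
# check_owner PATH USER
# Hypothetical helper: succeeds if PATH is owned by USER; otherwise prints the
# actual owner to stderr and fails. Assumes GNU coreutils `stat -c %U`.
check_owner() {
  local path=$1 want=$2 owner
  owner=$(stat -c %U -- "$path") || return 2
  if [ "$owner" = "$want" ]; then
    return 0
  fi
  echo "$path is owned by $owner, expected $want" >&2
  return 1
}
```

For example: `check_owner /var/www/drupal-7.28/sites/default/files www-data && check_owner /var/www/drupal-7.28/sites/default/settings.php www-data`.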

Install the "Standard" install profile. Use "drupal" as the database name, "root" as the DB user, and "1234" as the DB password.

On the next installation page, set a Drupal site name, email address, username and password. Choose whatever you'd like for this, so long as you can remember it.

ab

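ab ships with Apache, but you can also install it separately. On Fedora/RHEL it is part of the httpd-tools package (apache2-utils on Debian/Ubuntu):

```shell
yum install httpd-tools
```

Then run 100 requests, 5 at a time, against the front page:

```shell
ab -n 100 -c 5 http://localhost/drupal-7.28/
```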
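When comparing runs, the lines worth recording from ab's report are "Requests per second", "Failed requests" and "Non-2xx responses". ab prints the last one only when it is non-zero, and a non-zero count usually means you benchmarked an error page or a redirect. A minimal sketch that pulls those values out of ab's text output. `ab_summary` is a hypothetical helper and assumes ab's standard English report labels:

```shell
# ab_summary
# Hypothetical helper: reads ab's report on stdin and prints one summary line.
# A missing "Non-2xx responses" line is reported as 0.
ab_summary() {
  awk '
    /^Requests per second:/ { rps = $4 }
    /^Failed requests:/     { failed = $3 }
    /^Non-2xx responses:/   { non2xx = $3 }
    END { printf "rps=%s failed=%d non2xx=%d\n", rps, failed, non2xx }'
}
```

Usage: `ab -n 100 -c 5 http://localhost/drupal-7.28/ | ab_summary`.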

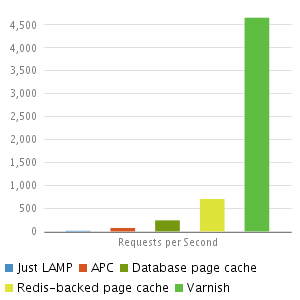

Results of load testing Drupal 7 with each of the changes outlined below:

| Milestone | Requests per second |
|---|---|
| Bare Drupal 7.28 install | 14.04 |
| APC (apc.php) | 75.50 |
| OPcache | 95 |
| OPcache + page cache (ab run from a remote host) | 154 |
| OPcache + page cache (ab run on localhost) | 689 |
| Redis | |
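To read the table as relative gains, divide each figure by the bare-install baseline. A small sketch of that arithmetic. It uses awk because POSIX shell arithmetic is integer-only. `speedup` is a hypothetical helper name:

```shell
# speedup BASELINE VALUE
# Hypothetical helper: prints VALUE / BASELINE rounded to one decimal place.
speedup() {
  awk -v base="$1" -v val="$2" 'BEGIN {
    if (base + 0 <= 0) { print "speedup: baseline must be positive" > "/dev/stderr"; exit 1 }
    printf "%.1f\n", val / base
  }'
}
```

With the figures above, `speedup 14.04 95` prints 6.8 and `speedup 14.04 689` prints 49.1. Keep in mind that the remote-host and localhost rows differ in where ab ran, not only in what was configured.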

OPcache replaces APC for PHP 5.5 or newer

Note: if you are using PHP 5.5 or newer, there is a built-in OPcache that is better than APC, and you should use it.

```shell
yum install php-opcache
```

Edit /etc/php.ini and add the following:

```ini
; Enable Zend OPcache for PHP 5.5
zend_extension=opcache.so
opcache.enable=On
```

Other php.ini settings for OPcache (on Fedora these are installed in /etc/php.d/opcache.ini for you):

```ini
; Sets how much memory to use (in megabytes)
opcache.memory_consumption=128

; Sets how much memory (in megabytes) OPcache uses for storing interned strings
; (e.g. class names and the files they are contained in)
opcache.interned_strings_buffer=8

; The maximum number of files OPcache will cache
opcache.max_accelerated_files=4000

; How often (in seconds) to check file timestamps for changes to the shared
; memory storage allocation.
opcache.revalidate_freq=60

; If enabled, a fast shutdown sequence is used for the accelerated code.
; The fast shutdown sequence doesn't free each allocated block, but lets
; the Zend Engine Memory Manager do the work.
opcache.fast_shutdown=1

; Enables the OPcache for the CLI version of PHP.
opcache.enable_cli=1
```
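If you provision servers with scripts rather than by hand, you can write the same settings in one step. A minimal sketch. `write_opcache_ini` is a hypothetical helper, and it overwrites its target. On Fedora the packaged /etc/php.d/opcache.ini already contains these directives, so editing that file in place may be preferable:

```shell
# write_opcache_ini PATH
# Hypothetical helper: writes the OPcache settings discussed above to PATH,
# replacing any existing file.
write_opcache_ini() {
  cat > "$1" <<'EOF'
; Enable Zend OPcache (PHP 5.5+)
zend_extension=opcache.so
opcache.enable=1
opcache.memory_consumption=128
opcache.interned_strings_buffer=8
opcache.max_accelerated_files=4000
opcache.revalidate_freq=60
opcache.fast_shutdown=1
opcache.enable_cli=1
EOF
}
```

After restarting Apache, `php -i | grep opcache.enable` should list the directives if the extension loaded. This checks the CLI configuration, which may read a different php.ini than Apache does.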

To view the status of OPcache, download this PHP file into your document root and open it in a browser:

```shell
cd /var/www/example.com/htdocs
wget https://raw.github.com/rlerdorf/opcache-status/master/opcache.php
```

Installing APC (for PHP older than 5.5)

```shell
# Fedora/RHEL
yum install php-pecl-apc
# or Debian/Ubuntu
apt-get install php-apc
# or build it from PECL
sudo pecl install apc
```

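Restart Apache:

```shell
service httpd restart
```

Run a load test:

```shell
ab -n 100 -c 5 http://localhost/drupal-7.28/
```

(Remember the trailing slash. ab rejects a URL with no path, such as http://localhost, and it does not follow redirects, so http://localhost/drupal-7.28 would only measure Apache's redirect to the slashed URL.)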

Report on APC memory and cache usage by copying apc.php into place:

```shell
zcat /usr/share/doc/php-apc/apc.php.gz > /var/www/drupal-7.28/apc.php
# or
cp /usr/share/pear/apc.php /var/www/html/
```

Navigate to http://${SERVER_IP}/drupal-7.28/apc.php

Apache/PHP tuning

Set memory_limit big enough to cover 100% of normal traffic, but smaller than what big administration tasks need.

Total possible Apache memory usage:

```text
= (Apache MaxClients * memory_limit) + apc.shm_size
= (10 * 128M) + 192M
= 1280M + 192M
= 1472M
```

With OPcache, use opcache.memory_consumption in place of apc.shm_size.
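Here is the same worst-case arithmetic as a sketch, plus the inverse: how many Apache children fit in a given memory budget. Both helpers are hypothetical and take megabytes. Like the formula, they assume every child can reach memory_limit at once. They ignore the OS, MySQL and Apache's own per-process overhead, so treat the result as an upper bound on MaxClients, not a recommendation:

```shell
# apache_max_memory MAX_CLIENTS MEMORY_LIMIT_MB SHM_MB
# Worst case: every child at memory_limit, plus the shared opcode cache.
apache_max_memory() {
  echo $(( $1 * $2 + $3 ))
}

# max_clients_for BUDGET_MB MEMORY_LIMIT_MB SHM_MB
# Largest MaxClients whose worst case fits in BUDGET_MB
# (shell integer division rounds down).
max_clients_for() {
  echo $(( ($1 - $3) / $2 ))
}
```

`apache_max_memory 10 128 192` prints 1472, matching the calculation above. With an illustrative 2048 MB set aside for Apache, `max_clients_for 2048 128 192` prints 14.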

Compression

Enable mod_gzip and optionally enable mod_deflate (on Apache 2.x, mod_deflate is the bundled compression module).

php-fpm users: substitute pm.max_children for MaxClients in the memory formula above. (Apache 2.4 renamed MaxClients to MaxRequestWorkers.)
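To confirm that compression is actually applied, request a page with an `Accept-Encoding: gzip` header and look for `Content-Encoding: gzip` in the response. A sketch. `has_gzip` is a hypothetical helper that reads response headers on stdin:

```shell
# has_gzip
# Hypothetical helper: reads HTTP response headers on stdin and succeeds
# if a "Content-Encoding: gzip" header is present (case-insensitive).
has_gzip() {
  grep -qi '^content-encoding:[[:space:]]*gzip'
}
```

Usage: `curl -s -o /dev/null -D - -H 'Accept-Encoding: gzip' http://localhost/drupal-7.28/ | has_gzip && echo compressed`. Here `-D -` writes the headers to stdout and `-o /dev/null` discards the body. Drupal 7 can also compress cached pages itself ("Compress cached pages" on the Performance page), so a gzip header on a page-cache hit does not necessarily come from Apache.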

MySQL Tuning

```shell
cd /opt
wget http://www.day32.com/MySQL/tuning-primer.sh
chmod u+x tuning-primer.sh
wget http://mysqltuner.pl -O mysqltuner.pl
chmod u+x mysqltuner.pl
```

Download devel

```shell
# Download devel and generate test content.
cd /var/www/drupal-7.28/
drush dl devel
drush en devel_generate
drush genu 1000
drush genc 1000

# Spider the site
wget -r --spider http://localhost/drupal-7.28/

# How much memory is MySQL using?
ps up $(pidof mysqld)
```
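`ps up` prints many columns, including RSS in kilobytes. A small sketch that reports only resident memory in megabytes for one or more PIDs, so you can record it before and after spidering. `rss_mb` is a hypothetical helper. It reads `VmRSS` from `/proc/PID/status`, which assumes Linux:

```shell
# rss_mb PID...
# Hypothetical helper: prints the resident set size of each PID in MB,
# read from the VmRSS line (reported in kB) of /proc/PID/status. Linux only.
rss_mb() {
  for pid in "$@"; do
    awk '/^VmRSS:/ { printf "%d\n", $2 / 1024 }' "/proc/$pid/status"
  done
}
```

Usage: `rss_mb $(pidof mysqld)`.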

View the node module's schema at http://drupalcode.org/project/drupal.git/blob/refs/heads/7.x:/modules/node/node.install .

How to show a summary of a table with all its fields and indexes (from the mysql client):

```sql
SHOW CREATE TABLE node\G
```

XHProf

Installing the PECL extension

```shell
pecl install xhprof-0.9.4
nano /etc/php5/conf.d/xhprof.ini
# Type in "extension=xhprof.so" to that file and save it.
apache2ctl restart
php -m | grep -i xhprof
```
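You can also do the nano step non-interactively, which is handy in provisioning scripts. A minimal sketch. `enable_php_ext` is a hypothetical helper, and the conf.d directory is distribution-specific (/etc/php5/conf.d here, /etc/php.d on Fedora/RHEL):

```shell
# enable_php_ext NAME CONF_DIR
# Hypothetical helper: writes CONF_DIR/NAME.ini containing "extension=NAME.so",
# replacing any existing file of that name.
enable_php_ext() {
  printf 'extension=%s.so\n' "$1" > "$2/$1.ini"
}
```

Usage: run `enable_php_ext xhprof /etc/php5/conf.d` as root, then `apache2ctl restart` and `php -m | grep -i xhprof` to confirm the extension loads.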

Installing the Drupal module

```shell
drush dl xhprof
drush -y en xhprof
```

Tuning the Views Cache

Caching query results can mean serving out-of-date content.

You can set the query results to never cache but still cache the rendered output. The rendered output is keyed on the contents of the result set itself.

More info on XHProf magic functions is available at http://msonnabaum.github.io/xhprof-presentation/#13 .

Redis

```shell
sudo yum install redis
sudo chkconfig redis on   # on systemd distributions: sudo systemctl enable redis
sudo service redis start
sudo pecl install redis
```

Restart web services (service httpd restart).

Make sure you install version 7.x-2.6 of the Redis module! Later versions have a regression that prevents it from working with $conf['page_cache_without_database'] = TRUE.

```shell
drush dl redis-2.6
drush en redis
```

Enabling Redis caching

Edit /var/www/drupal-7.28/sites/default/settings.php and add the following at the bottom.

```php
// Use Redis for all caching.
$conf['redis_client_interface'] = 'PhpRedis';
$conf['cache_backends'][] = 'sites/all/modules/redis/redis.autoload.inc';
$conf['cache_default_class'] = 'Redis_Cache';

// Also use a Redis-backed lock backend.
$conf['lock_inc'] = 'sites/all/modules/redis/redis.lock.inc';

// Skip the database for cache hits.
$conf['page_cache_invoke_hooks'] = FALSE;
$conf['page_cache_without_database'] = TRUE;
```

Varnish

Disable Drupal's internal page cache when using Varnish:

```php
if (!class_exists('DrupalFakeCache')) {
  $conf['cache_backends'][] = 'includes/cache-install.inc';
}
// Rely on the external cache for page caching.
$conf['cache_class_cache_page'] = 'DrupalFakeCache';
```
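Once Varnish is in front of Drupal, you can check for cache hits in the response headers. In Varnish 3 and 4, the X-Varnish header carries the current request's ID. On a cache hit it also carries the ID of the request that stored the object, so two numbers indicate a hit. A sketch. `varnish_hit` is a hypothetical helper that reads response headers on stdin:

```shell
# varnish_hit
# Hypothetical helper: reads HTTP response headers on stdin and succeeds
# if the X-Varnish header carries two request IDs (i.e. a cache hit).
varnish_hit() {
  awk 'tolower($1) == "x-varnish:" { found = 1; hit = (NF >= 3) }
       END { exit !(found && hit) }'
}
```

Usage: `curl -s -o /dev/null -D - http://localhost/drupal-7.28/ | varnish_hit && echo HIT`. Replace the URL with one that is actually served through Varnish. The first request for a URL is normally a miss, so run it twice.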

Varnish with multiple backends

Defining multiple backends

First of all, you will need to define the different backends that Varnish will rely on. In this example, we have 3 VMs, each with a private IPv4 address in the 10.0.0.0/24 range.

At the beginning of your VCL, define your backends:

```vcl
backend vm1 {
    .host = "10.0.0.11";
    .port = "80";
    .connect_timeout = 6000s;
    .first_byte_timeout = 6000s;
    .between_bytes_timeout = 6000s;
}

backend vm2 {
    .host = "10.0.0.12";
    .port = "80";
    .connect_timeout = 6000s;
    .first_byte_timeout = 6000s;
    .between_bytes_timeout = 6000s;
}

backend vm3 {
    .host = "10.0.0.13";
    .port = "80";
    .connect_timeout = 6000s;
    .first_byte_timeout = 6000s;
    .between_bytes_timeout = 6000s;
}
```

Using the appropriate backend

To define which backend (local HTTP server) Varnish should use to respond to HTTP requests, add a few custom rules to the vcl_recv section of your VCL config file:

```vcl
# Default backend is set to VM1.
# (Varnish 3 syntax; Varnish 4 uses req.backend_hint instead of req.backend.)
set req.backend = vm1;

if (req.http.host == "www.myhost2.com") {
    set req.backend = vm2;
}

if (req.http.host == "www.myhost3.com") {
    set req.backend = vm3;
}
```

Now restart Varnish and try connecting to each of the 3 hostnames: each should be served by the matching backend.

Varnish to cache content from several servers - 3.0.5

Varnish to cache content from several servers - 4.0.1

Varnish Architect Article

You're Doing It Wrong

Other notes

Dries Keynote

History of camera

Logstash - Howard @Tizzin

Agent – inputs, filters, outputs (like unix pipes on steroids)

It is Ruby running on Java (JRuby), so it takes a minute to start and will take 10 MG to run. There is a version written in C called lumberjack, or Log forwarder.

Log files → Logstash shipper → Redis queue → Logstash indexer

Logstash inputs (search for them in Google)

Output to a Redis Queue

Take from Redis Queue and put into ElasticSearch

ElasticSearch

Download and run, has good documentation

Kibana connects to Elasticsearch, but Graylog2 is better than Kibana.

Monitoring: Sensu (sensuapp.org) is way better than Nagios. See my post “Provisioning Sensu with Puppet”.

Logstash includes support for grok

Github.com/tizzo/vagrant-logstash-gra

Logstalgia: shows a cool visualization of web server logs on a monitoring screen.

Instructors

Mark Sonnabaum - Acquia

Steve Merrill - Phase2

David Strauss - Pantheon

Dan Reif - BlackMesh - Director of Emerging Technologies