Install single node Hadoop on CentOS 7 in 5 simple steps

First install CentOS 7 (minimal) (CentOS-7.0-1406-x86_64-DVD.iso)

I downloaded the CentOS 7 ISO here

### Vagrant Box

If you are using VirtualBox, you can use my Vagrant box for a default CentOS 7:

$ vagrant init malderhout/centos7

$ vagrant up

$ vagrant ssh

### Make sure the hostname "centos7" is present in /etc/hosts

127.0.0.1 centos7 localhost localhost.localdomain localhost4 localhost4.localdomain4
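If you prefer not to edit /etc/hosts by hand, the entry can be added idempotently. The following is a minimal sketch; `ensure_host_entry` is a hypothetical helper name, not something shipped with CentOS or Vagrant, and it appends a separate line rather than editing the existing 127.0.0.1 line.

```shell
#!/bin/sh
# Hypothetical helper: append "<ip> <name>" to a hosts file unless <name>
# already appears as a hostname field on a non-comment line.
ensure_host_entry() {
    hosts_file=$1
    ip=$2
    name=$3
    # Field 1 is the address; fields 2..NF are hostnames and aliases.
    if awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$hosts_file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}
```

As root, it would be called as `ensure_host_entry /etc/hosts 127.0.0.1 centos7`; running it a second time leaves the file unchanged.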

### Add port forwarding to the Vagrantfile located on the host machine, for example:

config.vm.network "forwarded_port", guest: 50070, host: 50070

### If you are not root, switch to root

$ sudo su

### Install wget; we use it later to download the Hadoop tarball

$ yum install wget

### Disable the firewall (not needed if you use the vagrant box)

$ systemctl stop firewalld

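To keep the firewall running and open a port instead, use the command below, then run firewall-cmd --reload so that the permanent rule takes effect: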

sudo firewall-cmd --zone=public --permanent --add-port=9000/tcp
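When more than one port has to be opened, the same command can be wrapped in a loop. This is a sketch under assumptions: `run`, `open_hadoop_ports` and the `DRY_RUN` variable are hypothetical names introduced here, and the ports shown in the usage note are only the two that appear in this post (9000 and 50070).

```shell
#!/bin/sh
# Hypothetical helper: execute a command, or just print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Hypothetical helper: open each given TCP port permanently in the public zone.
open_hadoop_ports() {
    for port in "$@"; do
        run firewall-cmd --zone=public --permanent --add-port="${port}/tcp"
    done
    # Permanent rules only reach the running firewall after a reload.
    run firewall-cmd --reload
}
```

As root, `open_hadoop_ports 9000 50070` would apply the rules; setting `DRY_RUN=1` first prints the commands without touching the firewall.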

We install Hadoop in 5 simple steps:

1) Install Java

2) Install Hadoop

3) Configure Hadoop

4) Start Hadoop

5) Test Hadoop

1) Install Java

### Install the OpenJDK Runtime Environment (Java SE 7)

$ yum install java-1.7.0-openjdk

2) Install Hadoop

### Create the hadoop user

$ useradd hadoop

### Log in as the hadoop user

$ su - hadoop

### Generate an SSH key

$ ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

### Authorize the key

$ cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

### Restrict the permissions of authorized_keys

$ chmod 0600 ~/.ssh/authorized_keys

### Verify that the key works and that no password is needed

$ ssh localhost

$ exit

### Download the Hadoop tarball from Apache into the hadoop user's $HOME directory and unpack it

$ wget http://www.carfab.com/apachesoftware/hadoop/common/hadoop-2.5.0/hadoop-2.5.0.tar.gz

$ tar xzf hadoop-2.5.0.tar.gz
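Because the tarball comes from a mirror, it may be worth comparing its checksum with the one Apache publishes for the release before unpacking it. A minimal sketch follows; `verify_sha256` is a hypothetical helper, and the expected value has to be copied from the checksum file on the Apache site (none is reproduced here).

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the SHA-256 of <file> equals <expected>.
# The expected value is lowercased first, since published checksums are
# sometimes written in uppercase hex.
verify_sha256() {
    file=$1
    expected=$(printf '%s' "$2" | tr 'A-F' 'a-f')
    actual=$(sha256sum "$file" | cut -d' ' -f1)
    [ "$actual" = "$expected" ]
}
```

Usage would look like `verify_sha256 hadoop-2.5.0.tar.gz "<checksum from apache.org>" && tar xzf hadoop-2.5.0.tar.gz`, so that the archive is only unpacked when the checksum matches.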

3) Configure Hadoop

### Set up the environment variables. Add the following lines to ~/.bashrc

export JAVA_HOME=/usr/lib/jvm/jre

export HADOOP_HOME=/home/hadoop/hadoop-2.5.0

export HADOOP_INSTALL=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export HADOOP_YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

export JAVA_LIBRARY_PATH=$HADOOP_HOME/lib/native:$JAVA_LIBRARY_PATH

### Load the variables into the current shell

$ source $HOME/.bashrc
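A quick way to confirm that the variables really are set after sourcing the file is to loop over their names. The sketch below assumes nothing beyond a POSIX shell; `missing_vars` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print the name of every listed variable that is unset
# or empty, and return non-zero if at least one is missing.
missing_vars() {
    status=0
    for name in "$@"; do
        # Indirect lookup of the variable whose name is stored in $name.
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            printf '%s\n' "$name"
            status=1
        fi
    done
    return $status
}
```

For example, `missing_vars JAVA_HOME HADOOP_HOME HADOOP_YARN_HOME` prints nothing and succeeds when all three are set.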

### For each file below, put the property info between the "configuration" tags

### Edit $HADOOP_HOME/etc/hadoop/core-site.xml

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:9000</value>

</property>
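To make clear where the property sits relative to the "configuration" tags, here is a sketch that writes a complete core-site.xml. `write_core_site` is a hypothetical helper; note that it overwrites the target file, including any license header or comments in the stock file shipped with Hadoop.

```shell
#!/bin/sh
# Hypothetical helper: write a complete core-site.xml to the given path,
# containing only the single property from the step above.
write_core_site() {
    cat > "$1" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
EOF
}
```

It would be called as `write_core_site "$HADOOP_HOME/etc/hadoop/core-site.xml"`. The other three files follow the same pattern, with their own properties inside the same pair of tags.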

### Edit $HADOOP_HOME/etc/hadoop/hdfs-site.xml

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.name.dir</name>

<value>file:///home/hadoop/hadoopdata/hdfs/namenode</value>

</property>

<property>

<name>dfs.data.dir</name>

<value>file:///home/hadoop/hadoopdata/hdfs/datanode</value>

</property>

### Copy the mapred-site.xml template

$ cp $HADOOP_HOME/etc/hadoop/mapred-site.xml.template $HADOOP_HOME/etc/hadoop/mapred-site.xml

### Edit $HADOOP_HOME/etc/hadoop/mapred-site.xml

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

### Edit $HADOOP_HOME/etc/hadoop/yarn-site.xml

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

### Set JAVA_HOME

### Edit $HADOOP_HOME/etc/hadoop/hadoop-env.sh and add the following line

export JAVA_HOME=/usr/lib/jvm/jre
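If /usr/lib/jvm/jre does not exist on your system, the value can be derived from the location of the java binary, since JAVA_HOME is the directory two levels above it. A small sketch, with `java_home_from_bin` as a hypothetical helper name:

```shell
#!/bin/sh
# Hypothetical helper: given the resolved path of the java binary
# (<JAVA_HOME>/bin/java), print <JAVA_HOME>.
java_home_from_bin() {
    dirname "$(dirname "$1")"
}
```

On the machine itself this would be used as `java_home_from_bin "$(readlink -f "$(command -v java)")"`, where `readlink -f` follows the symlinks that the package manager sets up for the java command.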

4) Start Hadoop

### Format the NameNode to initialize the directory that holds the HDFS metadata

$ hdfs namenode -format
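Formatting is a one-time step: running it again on a directory that already holds metadata asks for confirmation and, if confirmed, discards that metadata. A guard like the following sketch can avoid doing so by accident. It relies on the fact that a formatted NameNode directory contains a current/VERSION file; `namenode_is_formatted` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the given NameNode directory has already
# been formatted, i.e. it contains current/VERSION.
namenode_is_formatted() {
    [ -f "$1/current/VERSION" ]
}
```

With the directory configured in hdfs-site.xml above, the format step becomes `namenode_is_formatted /home/hadoop/hadoopdata/hdfs/namenode || hdfs namenode -format`.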

### Run the start-dfs.sh script

$ start-dfs.sh

### Check that HDFS is running

There should be 3 Java processes: NameNode, SecondaryNameNode and DataNode.

### Run the start-yarn.sh script

$ start-yarn.sh

### Check that YARN is running

There should be 2 more Java processes: ResourceManager and NodeManager.
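The process check can be scripted by filtering the output of `jps`, which prints one "<pid> <ClassName>" line per running JVM. Note that `jps` ships with the JDK; on CentOS 7 that most likely means installing java-1.7.0-openjdk-devel in addition to the runtime package used above. `missing_daemons` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read `jps` output on stdin and print every expected
# daemon name that is absent; return non-zero if any is missing.
missing_daemons() {
    listing=$(cat)
    status=0
    for daemon in "$@"; do
        # Match the class name exactly against the second column.
        if ! printf '%s\n' "$listing" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            printf '%s\n' "$daemon"
            status=1
        fi
    done
    return $status
}
```

After both start scripts have run, `jps | missing_daemons NameNode SecondaryNameNode DataNode ResourceManager NodeManager` should print nothing and exit with status 0.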

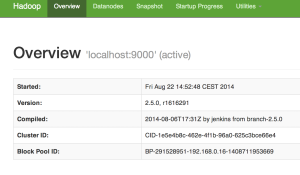

5) Test Hadoop

### Open the Hadoop web interface in the browser on port 50070

### Put a file

$ hdfs dfs -mkdir /user

$ hdfs dfs -mkdir /user/hadoop

$ hdfs dfs -put /var/log/boot.log

### Check in your browser that the file is available

Works!!! See also https://github.com/malderhout/hadoop-centos7-ansible